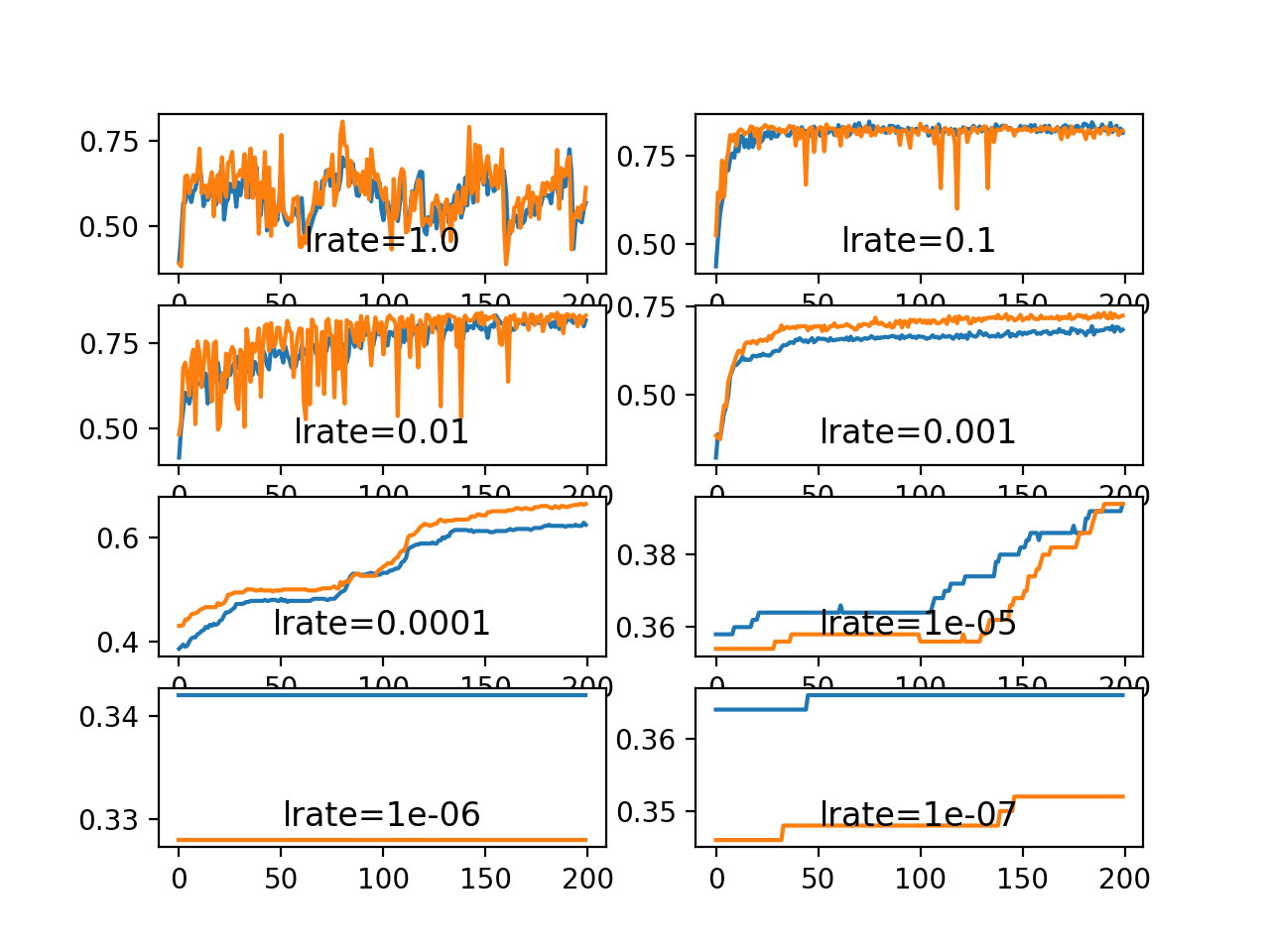

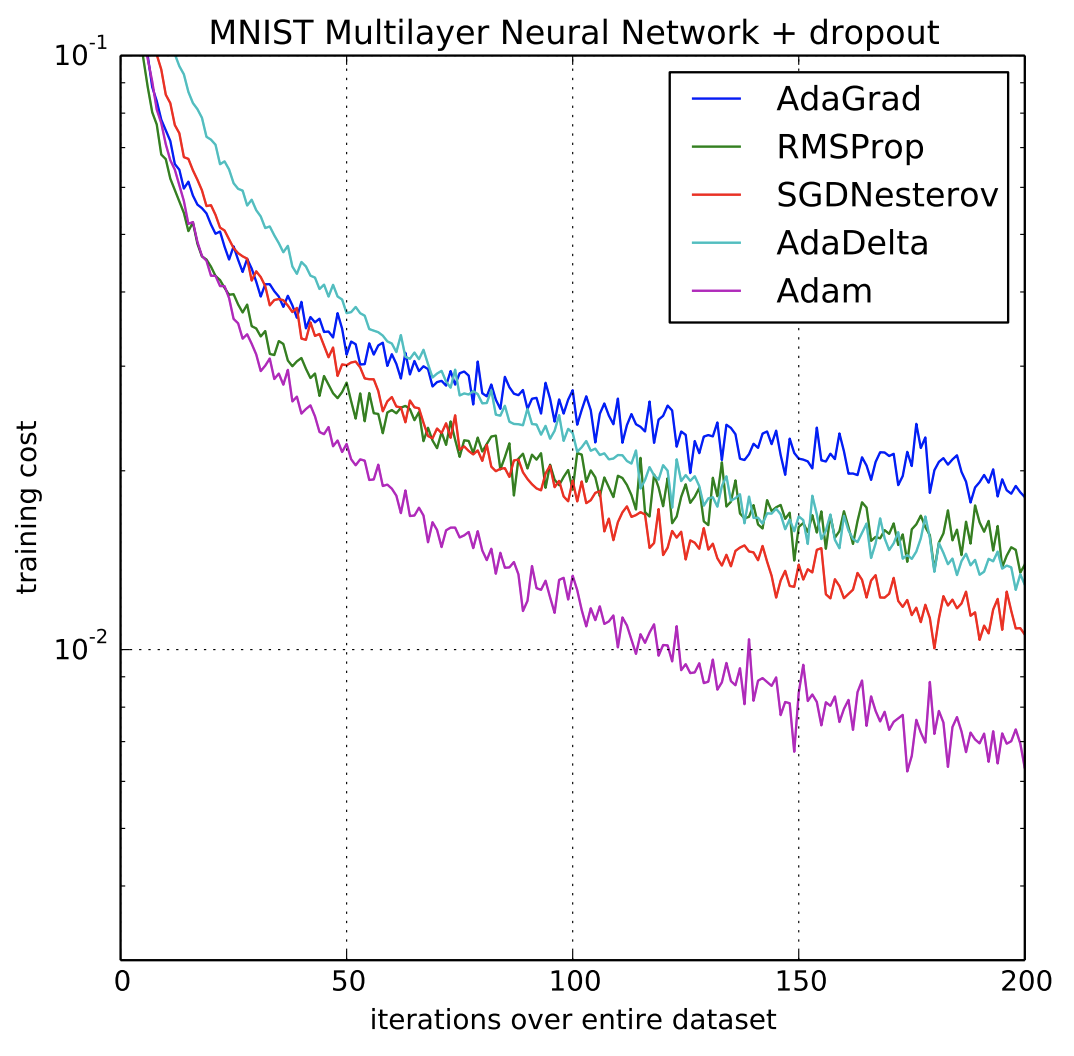

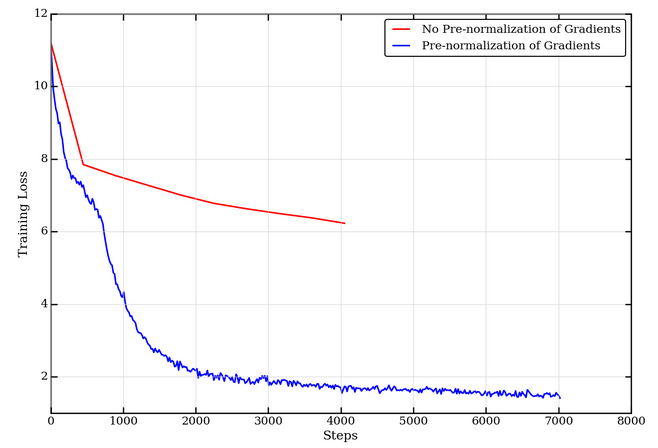

Adam is an effective gradient descent algorithm for ODEs. (a) Using a... | Download Scientific Diagram

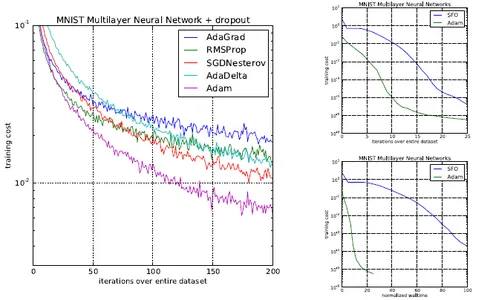

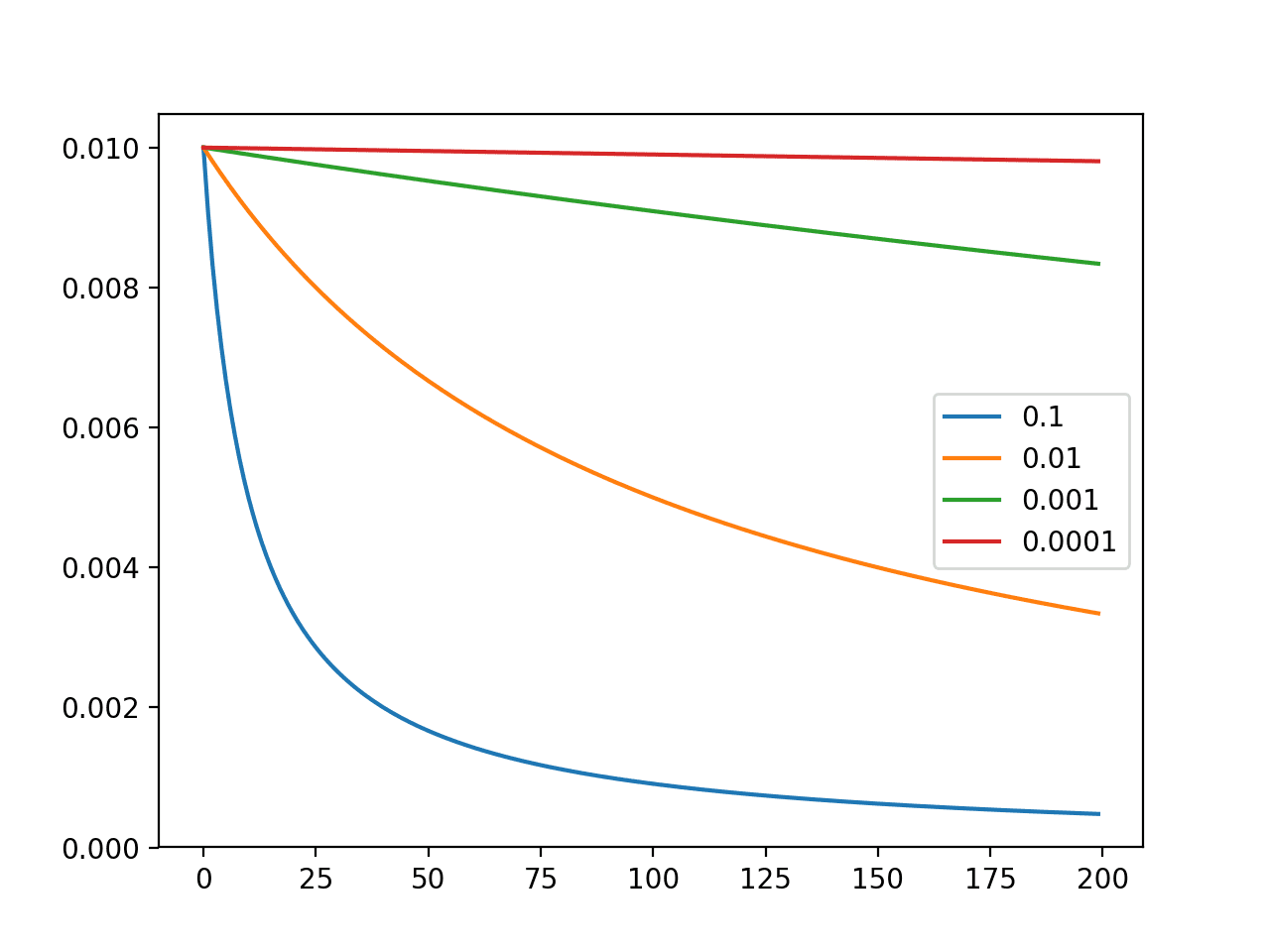

Understanding the AdaGrad Optimization Algorithm: An Adaptive Learning Rate Approach | by Brijesh Soni | Medium
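A minimal sketch of the AdaGrad update that this title refers to, in plain Python. The helper name `adagrad_step`, the default `lr=0.1`, and the toy quadratic `x^2 + 10 y^2` are illustrative assumptions, not taken from the linked article. Each coordinate accumulates its own squared gradients, and its step is divided by the square root of that sum, which is what makes the learning rate "adaptive".

```python
import math


def adagrad_step(params, grads, accum, lr=0.1, eps=1e-8):
    """One AdaGrad update (hypothetical helper for illustration).

    Each coordinate keeps a running sum of its squared gradients, and its
    step is scaled by lr / (sqrt(sum) + eps), so coordinates with large
    past gradients take smaller steps.
    """
    new_params, new_accum = [], []
    for p, g, a in zip(params, grads, accum):
        a = a + g * g  # accumulate this coordinate's squared gradient
        new_params.append(p - lr * g / (math.sqrt(a) + eps))
        new_accum.append(a)
    return new_params, new_accum


def loss(xy):
    # Toy, badly scaled quadratic f(x, y) = x^2 + 10 y^2 (assumed for the demo)
    x, y = xy
    return x * x + 10 * y * y


def grad(xy):
    # Gradient of the toy quadratic: (2x, 20y)
    x, y = xy
    return [2 * x, 20 * y]


if __name__ == "__main__":
    params, accum = [1.0, 1.0], [0.0, 0.0]
    for _ in range(100):
        params, accum = adagrad_step(params, grad(params), accum)
    print(params, loss(params))
```

Starting from `(1, 1)`, the first step moves both coordinates by exactly `lr` (0.1 · 2/2 and 0.1 · 20/20), even though their gradients differ by a factor of 10: the per-coordinate normalization cancels the gradient scale.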

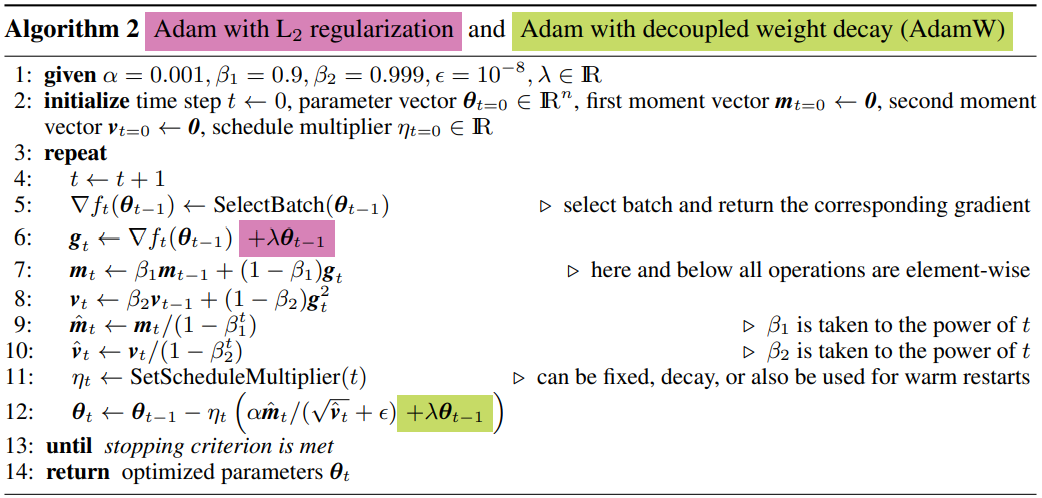

Why do we call ADAM an adaptive learning rate algorithm if the step size is a constant? - Cross Validated
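A minimal sketch, assuming the standard Adam update with bias correction, that illustrates the question above: the step size `alpha` is a constant, but each coordinate's effective learning rate is `alpha / (sqrt(v_hat) + eps)`, which depends on that coordinate's gradient history. The helper name `adam_step` and the example gradients are hypothetical; the defaults (`alpha=0.001`, `beta1=0.9`, `beta2=0.999`, `eps=1e-8`) are the commonly used ones.

```python
import math


def adam_step(params, grads, m, v, t, alpha=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update (hypothetical helper for illustration).

    Returns the new parameters, moment estimates, and the effective
    per-coordinate learning rate alpha / (sqrt(v_hat) + eps).
    """
    new_p, new_m, new_v, eff_lr = [], [], [], []
    for p, g, mi, vi in zip(params, grads, m, v):
        mi = beta1 * mi + (1 - beta1) * g          # first-moment (mean) estimate
        vi = beta2 * vi + (1 - beta2) * g * g      # second-moment (uncentered) estimate
        m_hat = mi / (1 - beta1 ** t)              # bias correction; t counts from 1
        v_hat = vi / (1 - beta2 ** t)
        lr_i = alpha / (math.sqrt(v_hat) + eps)    # constant alpha, rescaled per coordinate
        new_p.append(p - lr_i * m_hat)
        new_m.append(mi)
        new_v.append(vi)
        eff_lr.append(lr_i)
    return new_p, new_m, new_v, eff_lr


if __name__ == "__main__":
    # Two coordinates whose gradients differ by a factor of 100
    p, m, v, lr = adam_step([0.0, 0.0], [2.0, 200.0], [0.0, 0.0], [0.0, 0.0], t=1)
    print("effective learning rates:", lr)
    print("updated params:", p)
```

On the first step the effective learning rates differ by roughly 100× (about 5e-4 versus 5e-6), yet both parameters move by about `alpha`. That per-coordinate rescaling, not a changing `alpha`, is what "adaptive" refers to.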

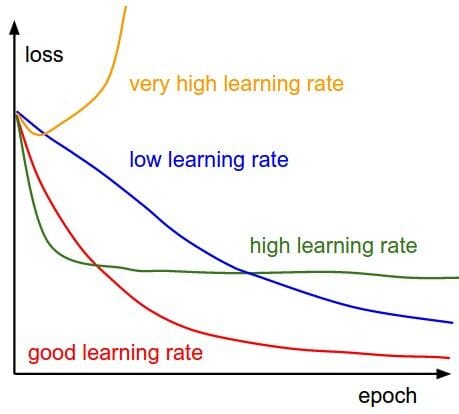

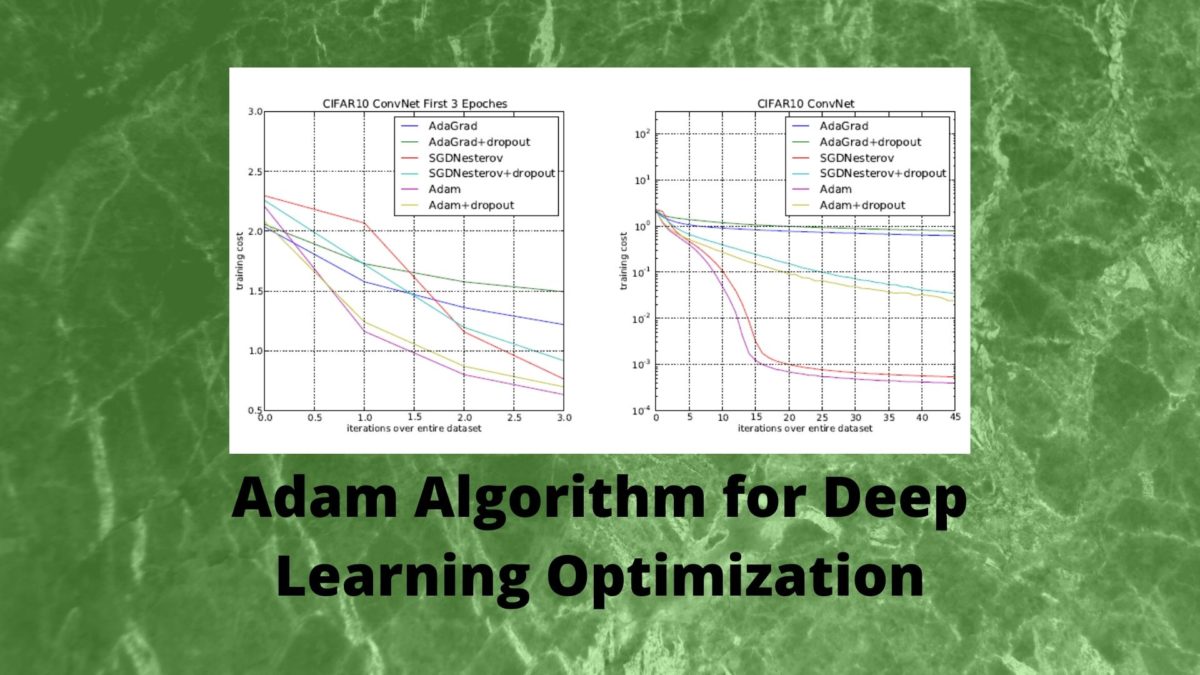

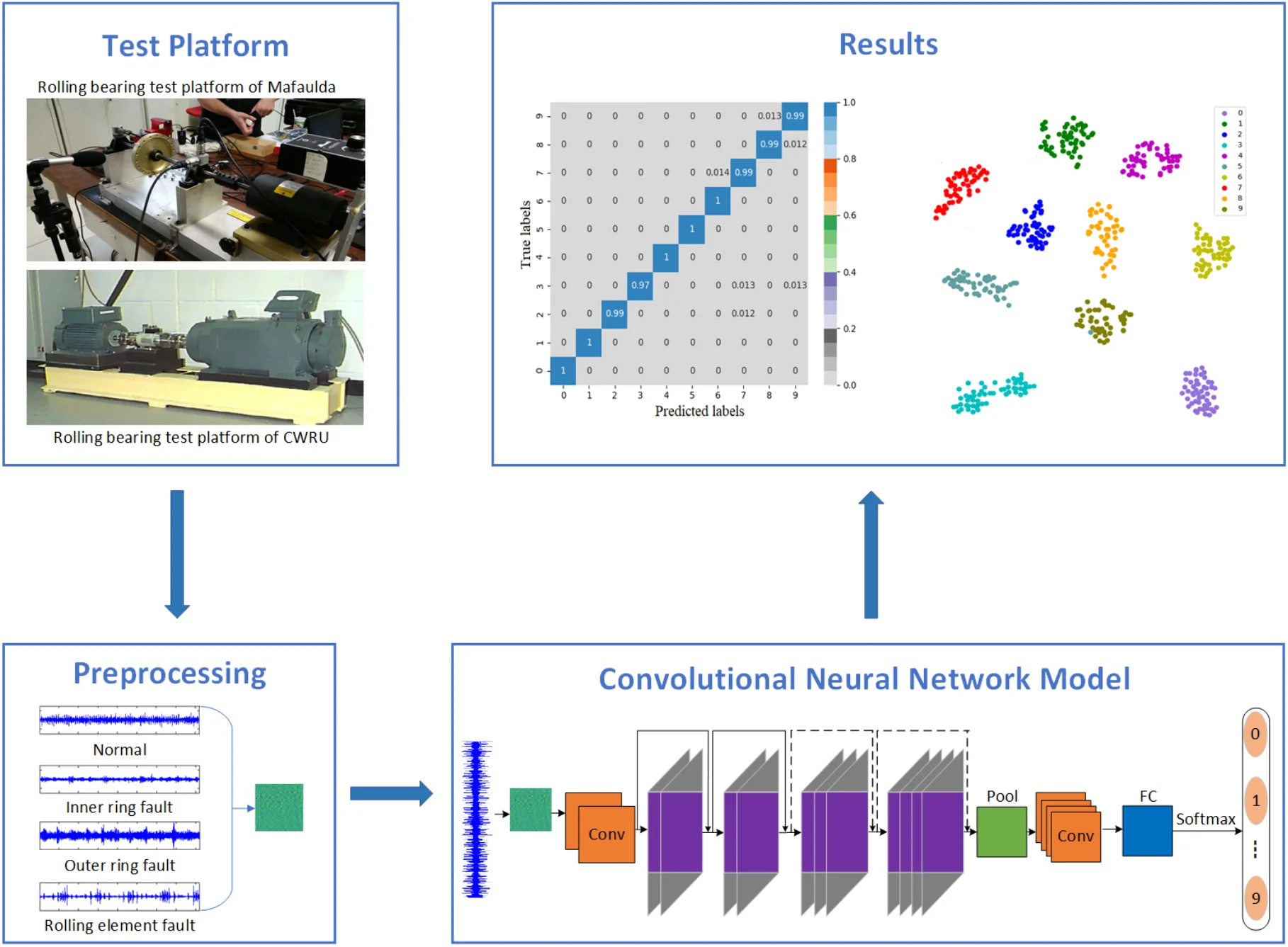

A convolutional neural network method based on Adam optimizer with power-exponential learning rate for bearing fault diagnosis - Extrica